library(autostats)

library(workflows)

library(dplyr)

library(tune)

library(rsample)

library(hardhat)

library(broom.mixed)

library(Ckmeans.1d.dp)

library(igraph)autostats provides convenient wrappers for modeling,

visualizing, and predicting using a tidy workflow. The emphasis is on

rapid iteration and quick results using an intuitive interface based off

the tibble and tidy_formula.

Prepare data

Set up the iris data set for modeling. Create dummies and any new columns before making the formula. This way the same formula can be use throughout the modeling and prediction process.

set.seed(34)

iris %>%

dplyr::as_tibble() %>%

framecleaner::create_dummies(remove_first_dummy = TRUE) -> iris1

#> 1 column(s) have become 2 dummy columns

iris1 %>%

tidy_formula(target = Petal.Length) -> petal_form

petal_form

#> Petal.Length ~ Sepal.Length + Sepal.Width + Petal.Width + species_versicolor +

#> species_virginica

#> <environment: 0x10e6d9d10>Use the rsample package to split into train and validation sets.

iris1 %>%

rsample::initial_split() -> iris_split

iris_split %>%

rsample::analysis() -> iris_train

iris_split %>%

rsample::assessment() -> iris_val

iris_split

#> <Training/Testing/Total>

#> <112/38/150>Fit boosting models and visualize

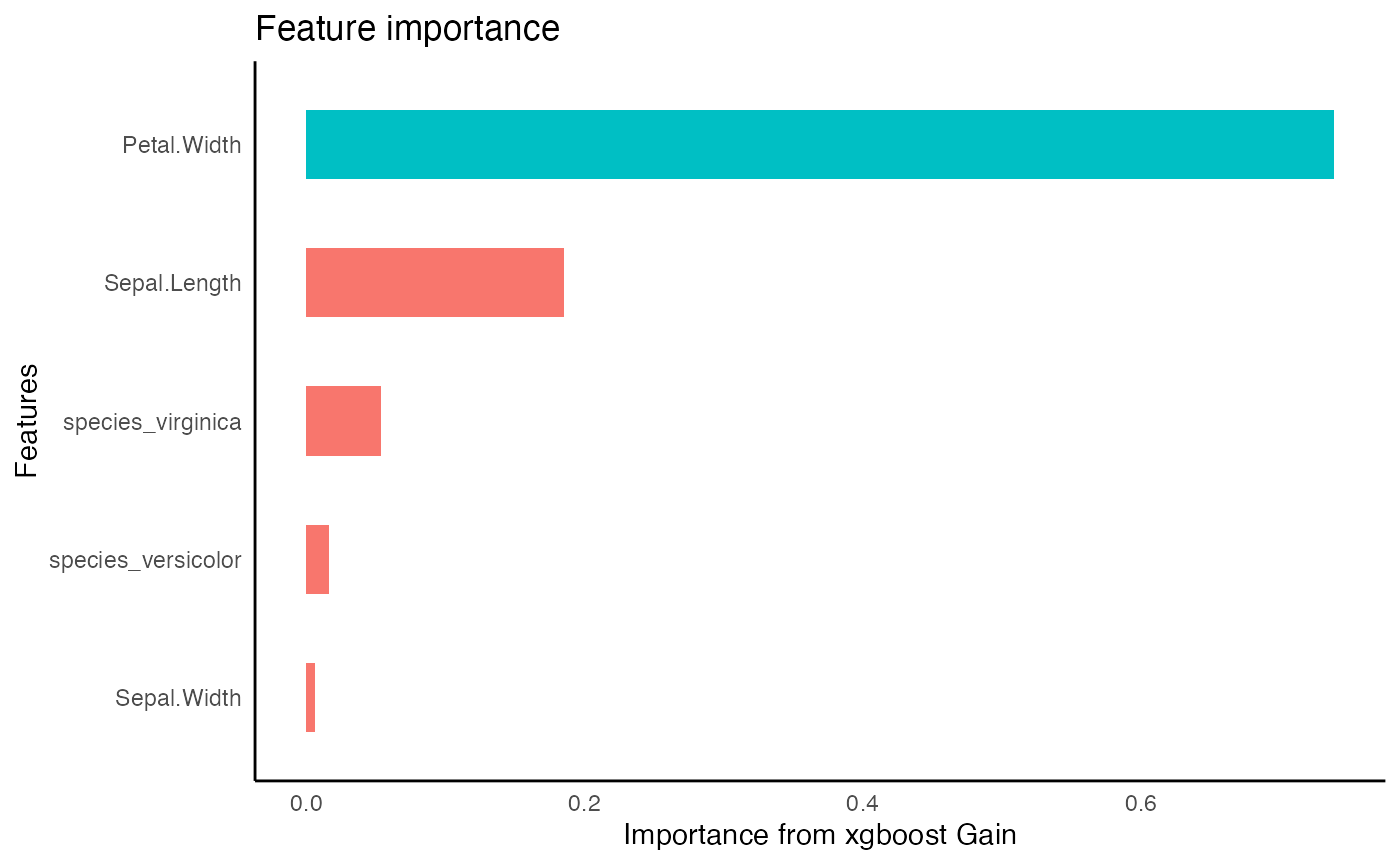

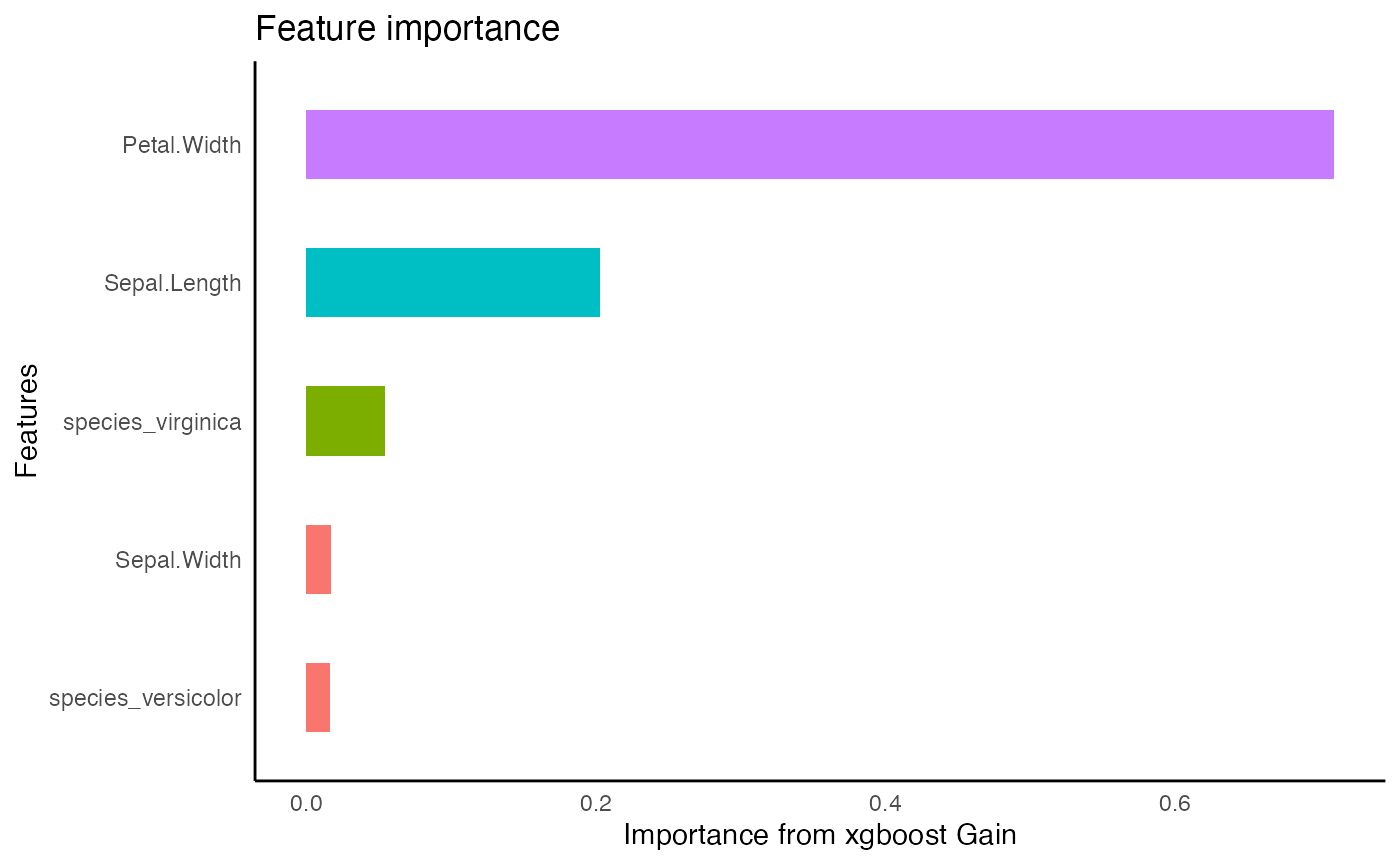

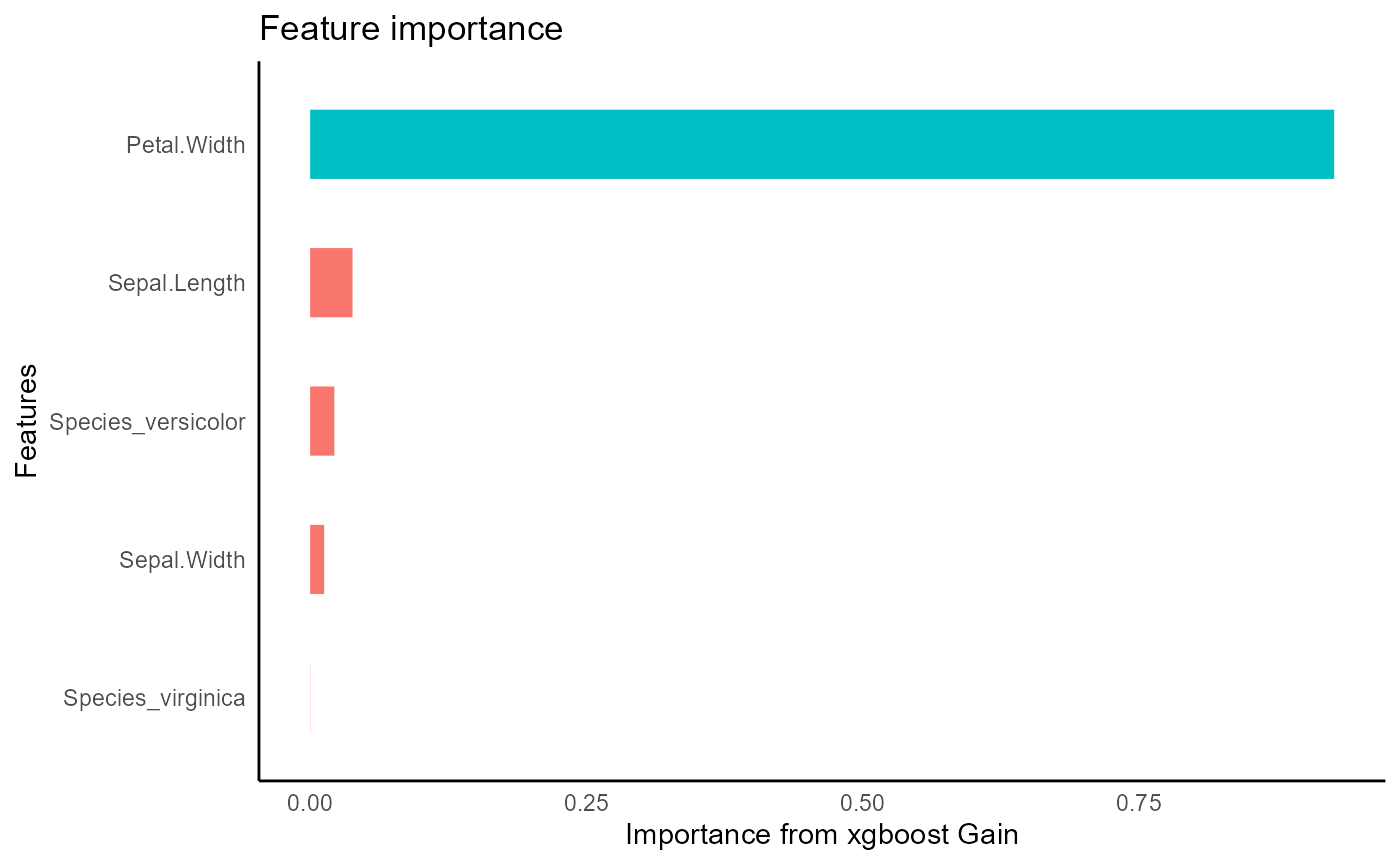

Fit models to the training set using the formula to predict

Petal.Length. Variable importance using gain for each

xgboost model can be visualized.

xgboost with grid search hyperparameter optimization

auto_tune_xgboost returns a workflow object with tuned

parameters and requires some postprocessing to get a trained

xgb.Booster object like tidy_xgboost.

xgboost also can be tuned using a grid that is created

internally using dials::grid_max_entropy. The

n_iter parameter is passed to grid_size.

Parallelization is highly effective in this method, so the default

argument parallel = TRUE is recommended.

iris_train %>%

auto_tune_xgboost(formula = petal_form, n_iter = 5L,trees = 20L, loss_reduction = 2, mtry = 3, tune_method = "grid", parallel = FALSE, counts = TRUE) -> xgb_tuned_grid

#> i Fold1: preprocessor 1/1

#> ✓ Fold1: preprocessor 1/1

#> i Fold1: preprocessor 1/1, model 1/5

#> ✓ Fold1: preprocessor 1/1, model 1/5

#> i Fold1: preprocessor 1/1, model 1/5 (extracts)

#> i Fold1: preprocessor 1/1, model 1/5 (predictions)

#> i Fold1: preprocessor 1/1

#> ✓ Fold1: preprocessor 1/1

#> i Fold1: preprocessor 1/1, model 2/5

#> ✓ Fold1: preprocessor 1/1, model 2/5

#> i Fold1: preprocessor 1/1, model 2/5 (extracts)

#> i Fold1: preprocessor 1/1, model 2/5 (predictions)

#> i Fold1: preprocessor 1/1

#> ✓ Fold1: preprocessor 1/1

#> i Fold1: preprocessor 1/1, model 3/5

#> ✓ Fold1: preprocessor 1/1, model 3/5

#> i Fold1: preprocessor 1/1, model 3/5 (extracts)

#> i Fold1: preprocessor 1/1, model 3/5 (predictions)

#> i Fold1: preprocessor 1/1

#> ✓ Fold1: preprocessor 1/1

#> i Fold1: preprocessor 1/1, model 4/5

#> ✓ Fold1: preprocessor 1/1, model 4/5

#> i Fold1: preprocessor 1/1, model 4/5 (extracts)

#> i Fold1: preprocessor 1/1, model 4/5 (predictions)

#> i Fold1: preprocessor 1/1

#> ✓ Fold1: preprocessor 1/1

#> i Fold1: preprocessor 1/1, model 5/5

#> ✓ Fold1: preprocessor 1/1, model 5/5

#> i Fold1: preprocessor 1/1, model 5/5 (extracts)

#> i Fold1: preprocessor 1/1, model 5/5 (predictions)

#> i Fold2: preprocessor 1/1

#> ✓ Fold2: preprocessor 1/1

#> i Fold2: preprocessor 1/1, model 1/5

#> ✓ Fold2: preprocessor 1/1, model 1/5

#> i Fold2: preprocessor 1/1, model 1/5 (extracts)

#> i Fold2: preprocessor 1/1, model 1/5 (predictions)

#> i Fold2: preprocessor 1/1

#> ✓ Fold2: preprocessor 1/1

#> i Fold2: preprocessor 1/1, model 2/5

#> ✓ Fold2: preprocessor 1/1, model 2/5

#> i Fold2: preprocessor 1/1, model 2/5 (extracts)

#> i Fold2: preprocessor 1/1, model 2/5 (predictions)

#> i Fold2: preprocessor 1/1

#> ✓ Fold2: preprocessor 1/1

#> i Fold2: preprocessor 1/1, model 3/5

#> ✓ Fold2: preprocessor 1/1, model 3/5

#> i Fold2: preprocessor 1/1, model 3/5 (extracts)

#> i Fold2: preprocessor 1/1, model 3/5 (predictions)

#> i Fold2: preprocessor 1/1

#> ✓ Fold2: preprocessor 1/1

#> i Fold2: preprocessor 1/1, model 4/5

#> ✓ Fold2: preprocessor 1/1, model 4/5

#> i Fold2: preprocessor 1/1, model 4/5 (extracts)

#> i Fold2: preprocessor 1/1, model 4/5 (predictions)

#> i Fold2: preprocessor 1/1

#> ✓ Fold2: preprocessor 1/1

#> i Fold2: preprocessor 1/1, model 5/5

#> ✓ Fold2: preprocessor 1/1, model 5/5

#> i Fold2: preprocessor 1/1, model 5/5 (extracts)

#> i Fold2: preprocessor 1/1, model 5/5 (predictions)

#> i Fold3: preprocessor 1/1

#> ✓ Fold3: preprocessor 1/1

#> i Fold3: preprocessor 1/1, model 1/5

#> ✓ Fold3: preprocessor 1/1, model 1/5

#> i Fold3: preprocessor 1/1, model 1/5 (extracts)

#> i Fold3: preprocessor 1/1, model 1/5 (predictions)

#> i Fold3: preprocessor 1/1

#> ✓ Fold3: preprocessor 1/1

#> i Fold3: preprocessor 1/1, model 2/5

#> ✓ Fold3: preprocessor 1/1, model 2/5

#> i Fold3: preprocessor 1/1, model 2/5 (extracts)

#> i Fold3: preprocessor 1/1, model 2/5 (predictions)

#> i Fold3: preprocessor 1/1

#> ✓ Fold3: preprocessor 1/1

#> i Fold3: preprocessor 1/1, model 3/5

#> ✓ Fold3: preprocessor 1/1, model 3/5

#> i Fold3: preprocessor 1/1, model 3/5 (extracts)

#> i Fold3: preprocessor 1/1, model 3/5 (predictions)

#> i Fold3: preprocessor 1/1

#> ✓ Fold3: preprocessor 1/1

#> i Fold3: preprocessor 1/1, model 4/5

#> ✓ Fold3: preprocessor 1/1, model 4/5

#> i Fold3: preprocessor 1/1, model 4/5 (extracts)

#> i Fold3: preprocessor 1/1, model 4/5 (predictions)

#> i Fold3: preprocessor 1/1

#> ✓ Fold3: preprocessor 1/1

#> i Fold3: preprocessor 1/1, model 5/5

#> ✓ Fold3: preprocessor 1/1, model 5/5

#> i Fold3: preprocessor 1/1, model 5/5 (extracts)

#> i Fold3: preprocessor 1/1, model 5/5 (predictions)

#> i Fold4: preprocessor 1/1

#> ✓ Fold4: preprocessor 1/1

#> i Fold4: preprocessor 1/1, model 1/5

#> ✓ Fold4: preprocessor 1/1, model 1/5

#> i Fold4: preprocessor 1/1, model 1/5 (extracts)

#> i Fold4: preprocessor 1/1, model 1/5 (predictions)

#> i Fold4: preprocessor 1/1

#> ✓ Fold4: preprocessor 1/1

#> i Fold4: preprocessor 1/1, model 2/5

#> ✓ Fold4: preprocessor 1/1, model 2/5

#> i Fold4: preprocessor 1/1, model 2/5 (extracts)

#> i Fold4: preprocessor 1/1, model 2/5 (predictions)

#> i Fold4: preprocessor 1/1

#> ✓ Fold4: preprocessor 1/1

#> i Fold4: preprocessor 1/1, model 3/5

#> ✓ Fold4: preprocessor 1/1, model 3/5

#> i Fold4: preprocessor 1/1, model 3/5 (extracts)

#> i Fold4: preprocessor 1/1, model 3/5 (predictions)

#> i Fold4: preprocessor 1/1

#> ✓ Fold4: preprocessor 1/1

#> i Fold4: preprocessor 1/1, model 4/5

#> ✓ Fold4: preprocessor 1/1, model 4/5

#> i Fold4: preprocessor 1/1, model 4/5 (extracts)

#> i Fold4: preprocessor 1/1, model 4/5 (predictions)

#> i Fold4: preprocessor 1/1

#> ✓ Fold4: preprocessor 1/1

#> i Fold4: preprocessor 1/1, model 5/5

#> ✓ Fold4: preprocessor 1/1, model 5/5

#> i Fold4: preprocessor 1/1, model 5/5 (extracts)

#> i Fold4: preprocessor 1/1, model 5/5 (predictions)

#> i Fold5: preprocessor 1/1

#> ✓ Fold5: preprocessor 1/1

#> i Fold5: preprocessor 1/1, model 1/5

#> ✓ Fold5: preprocessor 1/1, model 1/5

#> i Fold5: preprocessor 1/1, model 1/5 (extracts)

#> i Fold5: preprocessor 1/1, model 1/5 (predictions)

#> i Fold5: preprocessor 1/1

#> ✓ Fold5: preprocessor 1/1

#> i Fold5: preprocessor 1/1, model 2/5

#> ✓ Fold5: preprocessor 1/1, model 2/5

#> i Fold5: preprocessor 1/1, model 2/5 (extracts)

#> i Fold5: preprocessor 1/1, model 2/5 (predictions)

#> i Fold5: preprocessor 1/1

#> ✓ Fold5: preprocessor 1/1

#> i Fold5: preprocessor 1/1, model 3/5

#> ✓ Fold5: preprocessor 1/1, model 3/5

#> i Fold5: preprocessor 1/1, model 3/5 (extracts)

#> i Fold5: preprocessor 1/1, model 3/5 (predictions)

#> i Fold5: preprocessor 1/1

#> ✓ Fold5: preprocessor 1/1

#> i Fold5: preprocessor 1/1, model 4/5

#> ✓ Fold5: preprocessor 1/1, model 4/5

#> i Fold5: preprocessor 1/1, model 4/5 (extracts)

#> i Fold5: preprocessor 1/1, model 4/5 (predictions)

#> i Fold5: preprocessor 1/1

#> ✓ Fold5: preprocessor 1/1

#> i Fold5: preprocessor 1/1, model 5/5

#> ✓ Fold5: preprocessor 1/1, model 5/5

#> i Fold5: preprocessor 1/1, model 5/5 (extracts)

#> i Fold5: preprocessor 1/1, model 5/5 (predictions)

xgb_tuned_grid %>%

parsnip::fit(iris_train) %>%

parsnip::extract_fit_engine() -> xgb_tuned_fit_grid

xgb_tuned_fit_grid %>%

visualize_model()

xgboost with default parameters

iris_train %>%

tidy_xgboost(formula = petal_form) -> xgb_base

#> accuracy tested on a validation set

#> # A tibble: 3 × 2

#> .metric .estimate

#> <chr> <dbl>

#> 1 ccc 0.924

#> 2 rmse 0.615

#> 3 rsq 0.916

xgboost with hand-picked parameters

iris_train %>%

tidy_xgboost(petal_form,

trees = 250L,

tree_depth = 3L,

sample_size = .5,

mtry = .5,

min_n = 2) -> xgb_opt

#> accuracy tested on a validation set

#> # A tibble: 3 × 2

#> .metric .estimate

#> <chr> <dbl>

#> 1 ccc 0.972

#> 2 rmse 0.384

#> 3 rsq 0.961

predict on validation set

make predictions

Predictions are iteratively added to the validation data frame. The name of the column is automatically created using the models name and the prediction target.

xgb_base %>%

tidy_predict(newdata = iris_val, form = petal_form) -> iris_val2

#> created the following column: Petal.Length_preds_xgb_base

xgb_opt %>%

tidy_predict(newdata = iris_val2, petal_form) -> iris_val3

#> created the following column: Petal.Length_preds_xgb_optpredictions with eval_preds

Instead of evaluationg these prediction 1 by 1, This step is

automated with eval_preds. This function is specifically

designed to evaluate predicted columns with names given from

tidy_predict.

iris_val3 %>%

eval_preds()

#> # A tibble: 6 × 5

#> .metric .estimator .estimate model target

#> <chr> <chr> <dbl> <chr> <chr>

#> 1 ccc standard 0.980 xgb_base Petal.Length

#> 2 ccc standard 0.978 xgb_opt Petal.Length

#> 3 rmse standard 0.343 xgb_base Petal.Length

#> 4 rmse standard 0.354 xgb_opt Petal.Length

#> 5 rsq standard 0.973 xgb_base Petal.Length

#> 6 rsq standard 0.972 xgb_opt Petal.Lengthget shapley values

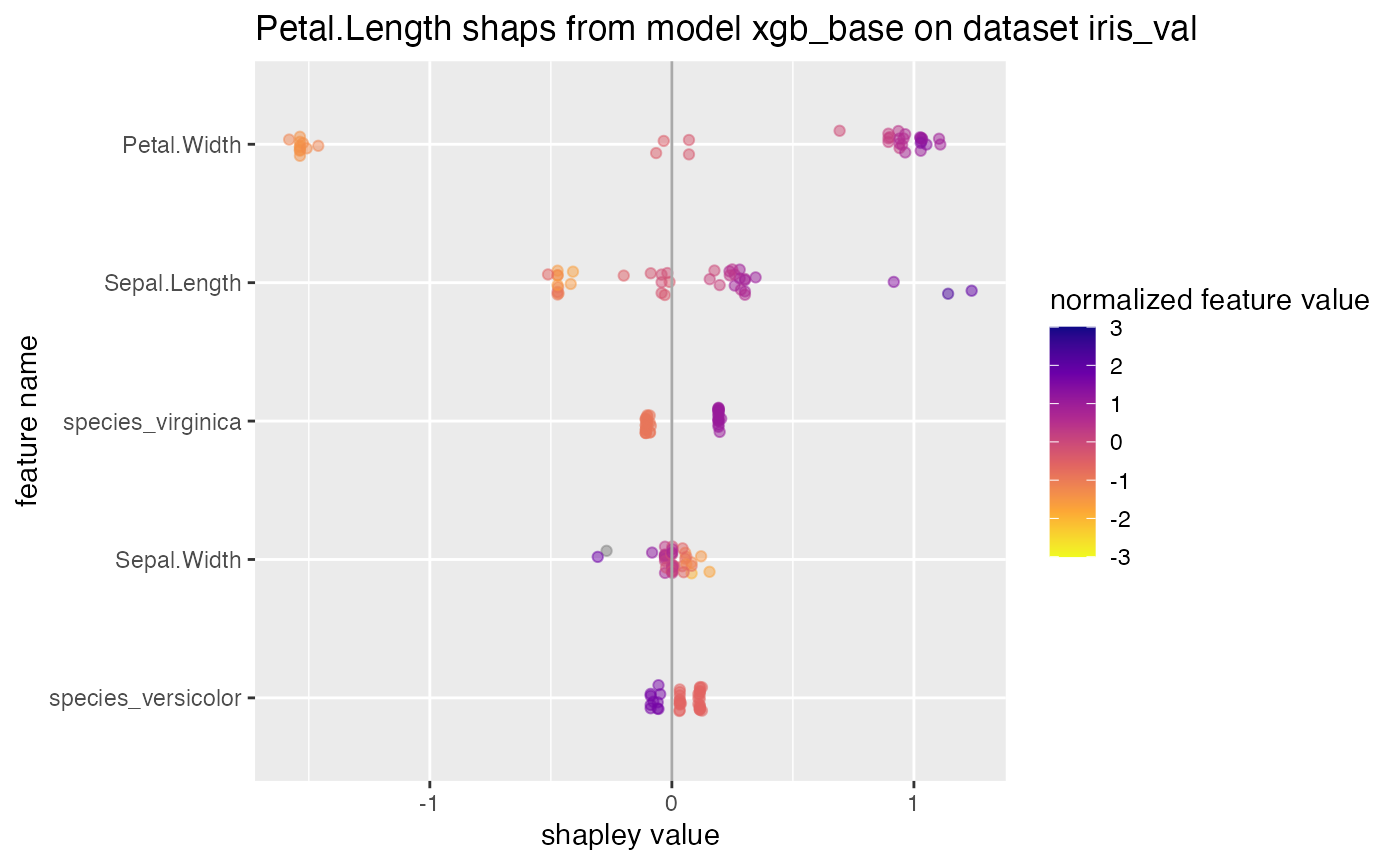

tidy_shap has similar syntax to

tidy_predict and can be used to get shapley values from

xgboost models on a validation set.

shap_list$shap_tbl

#> # A tibble: 38 × 5

#> Sepal.Length Sepal.Width Petal.Width species_versicolor species_virginica

#> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 -0.472 -0.0284 -1.54 0.0326 -0.108

#> 2 -0.472 -0.0284 -1.54 0.0326 -0.108

#> 3 -0.0873 -0.270 -1.58 0.0378 -0.108

#> 4 -0.470 -0.0284 -1.52 0.0326 -0.108

#> 5 -0.472 -0.0284 -1.54 0.0326 -0.108

#> 6 -0.418 -0.0817 -1.54 0.0308 -0.107

#> 7 -0.468 -0.0284 -1.46 0.0326 -0.108

#> 8 -0.472 -0.0284 -1.54 0.0326 -0.108

#> 9 -0.472 -0.0284 -1.54 0.0326 -0.108

#> 10 -0.409 0.156 -1.51 0.0326 -0.108

#> # ℹ 28 more rows

shap_list$shap_summary

#> # A tibble: 5 × 5

#> name cor var sum sum_abs

#> <chr> <dbl> <dbl> <dbl> <dbl>

#> 1 Petal.Width 0.950 1.27 5.56 39.4

#> 2 Sepal.Length 0.946 0.193 1.89 13.1

#> 3 species_virginica 0.999 0.0222 1.15 5.43

#> 4 species_versicolor -0.884 0.00600 1.62 3.03

#> 5 Sepal.Width -0.873 0.00699 0.00356 1.83

shap_list$swarmplot

shap_list$scatterplotsunderstand xgboost with other functions from the original package

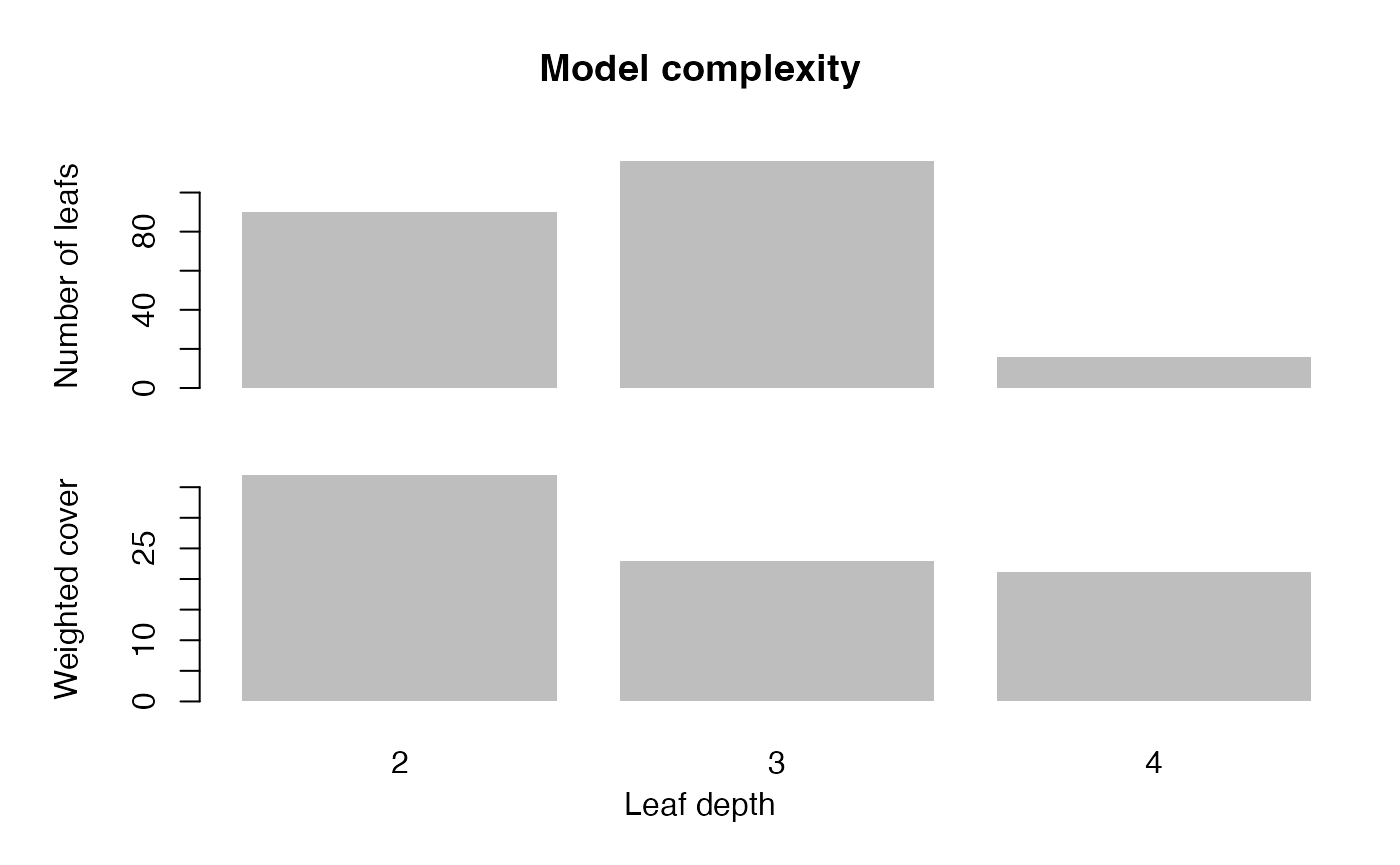

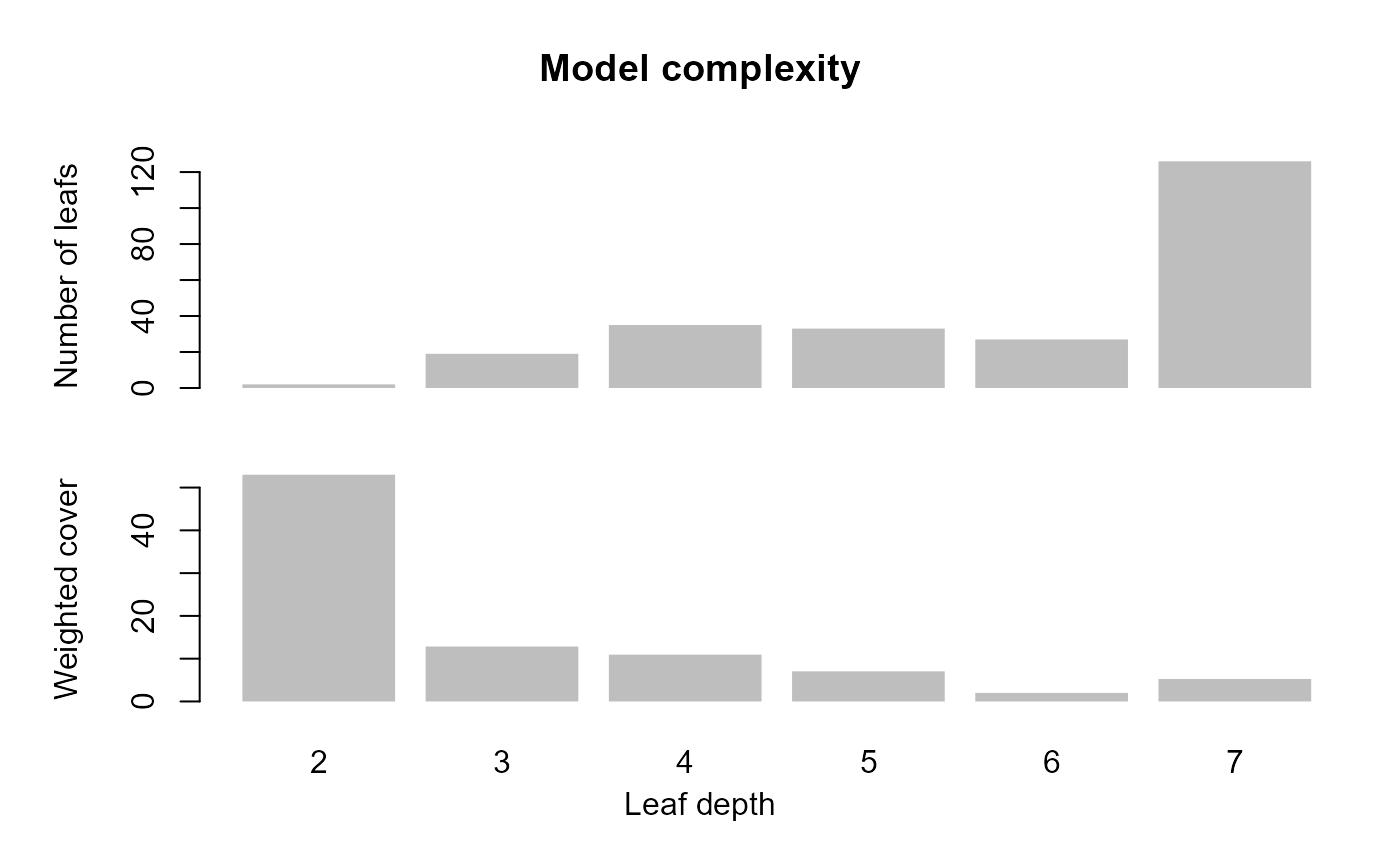

Overfittingin the base config may be related to growing deep trees.

xgb_base %>%

xgboost::xgb.plot.deepness()

xgb_base %>%

xgboost::xgb.plot.deepness() Plot the first tree in the model. The small in terminal leaves suggests

overfitting in the base model.

Plot the first tree in the model. The small in terminal leaves suggests

overfitting in the base model.

xgb_base %>%

xgboost::xgb.plot.tree(model = ., trees = 1)